SKNet

ConvNet Image Classification

- class lucid.models.SKNet(block: Module, layers: list[int], num_classes: int = 1000, kernel_sizes: list[int] = [3, 5], base_width: int = 64, cardinality: int = 1, **resnet_args: Any)

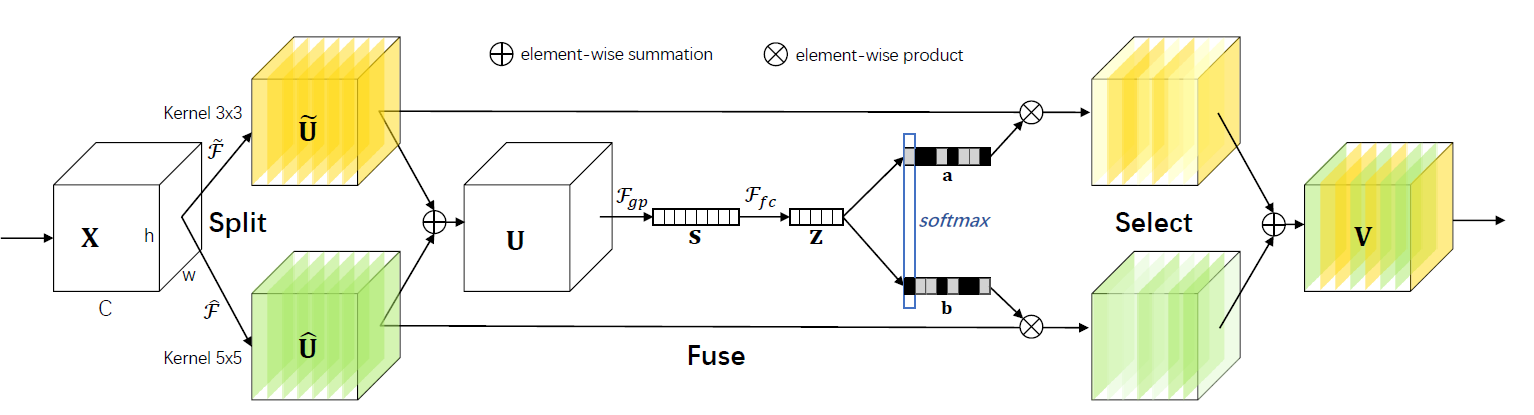

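The SKNet class extends the ResNet architecture with Selective Kernel (SK) blocks. Each SK block runs parallel convolution branches with different kernel sizes. It then fuses them using a channel-wise softmax attention computed from globally pooled features, so each block can adapt its effective receptive field to the input. This lets the network combine multi-scale features adaptively, which helps on tasks where objects appear at varying scales.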
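The attention-based fusion described above can be sketched in plain Python. The sketch follows the Split/Fuse/Select description from the original Selective Kernel Networks paper (Li et al., CVPR 2019). It is not lucid's implementation. The function name `selective_kernel_fuse` and the weight matrices `w_reduce` and `w_select` are hypothetical, batch normalization is omitted, and feature maps are nested lists (channels of flattened H×W values) rather than tensors.

```python
import math


def selective_kernel_fuse(branches, w_reduce, w_select):
    """Fuse M branch outputs with per-channel softmax attention (SK fuse + select).

    branches: list of M feature maps; each is a list of C channels,
              each channel a flat list of H*W activations.
    w_reduce: d x C matrix (hypothetical weights) squeezing channel stats into z.
    w_select: list of M matrices, each C x d, giving per-branch logits.
    Returns (selected feature map, attention weights indexed [branch][channel]).
    """
    num_branches = len(branches)
    num_channels = len(branches[0])

    # Fuse: element-wise sum of all branch outputs.
    fused = [
        [sum(b[c][i] for b in branches) for i in range(len(branches[0][c]))]
        for c in range(num_channels)
    ]
    # Global average pooling: one statistic per channel.
    s = [sum(ch) / len(ch) for ch in fused]
    # Compact descriptor z = ReLU(W_reduce @ s); batch norm omitted for brevity.
    z = [max(0.0, sum(w * x for w, x in zip(row, s))) for row in w_reduce]
    # Per-branch, per-channel logits: logits[m][c].
    logits = [[sum(w * x for w, x in zip(row, z)) for row in w_m] for w_m in w_select]

    # Softmax across branches, independently for each channel.
    attn = [[0.0] * num_channels for _ in range(num_branches)]
    for c in range(num_channels):
        peak = max(logits[m][c] for m in range(num_branches))
        exps = [math.exp(logits[m][c] - peak) for m in range(num_branches)]
        total = sum(exps)
        for m in range(num_branches):
            attn[m][c] = exps[m] / total

    # Select: attention-weighted sum of the branch outputs.
    out = [
        [sum(attn[m][c] * branches[m][c][i] for m in range(num_branches))
         for i in range(len(branches[0][c]))]
        for c in range(num_channels)
    ]
    return out, attn


# Two branches (e.g. 3x3 and 5x5 outputs), C = 2 channels, H*W = 4, d = 1.
branch_3x3 = [[1.0, 2.0, 3.0, 4.0], [0.5, 0.5, 0.5, 0.5]]
branch_5x5 = [[4.0, 3.0, 2.0, 1.0], [1.5, 1.5, 1.5, 1.5]]
w_reduce = [[0.5, 0.5]]
w_select = [[[1.0], [-1.0]], [[-1.0], [1.0]]]
out, attn = selective_kernel_fuse([branch_3x3, branch_5x5], w_reduce, w_select)
print(attn)
```

Because the weights for each channel sum to 1 across branches, the output is a convex combination of the branch outputs. Channel 0 here leans toward the first branch and channel 1 toward the second.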

Class Signature

class lucid.models.SKNet(
    block: nn.Module,
    layers: list[int],
    num_classes: int = 1000,
    kernel_sizes: list[int] = [3, 5],
    base_width: int = 64,
    cardinality: int = 1,
    **resnet_args: Any,
)

Parameters

block (nn.Module): The building block module used for the SKNet layers. Typically an SKBlock or compatible block type.

layers (list[int]): Specifies the number of blocks in each stage of the network.

num_classes (int, optional): Number of output classes for the final fully connected layer. Default: 1000.

kernel_sizes (list[int], optional): Kernel sizes of the parallel convolution branches in each SK block (one branch per entry), used for multi-scale processing. Default: [3, 5].

base_width (int, optional): Base width of the feature maps in the SK blocks. Default: 64.

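cardinality (int, optional): The number of groups used by the grouped convolutions in the SK blocks. Default: 1.

**resnet_args (Any, optional): Additional keyword arguments passed through to the underlying ResNet constructor.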
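The SK fuse step sums branch outputs element-wise, so every branch built from `kernel_sizes` must produce the same spatial size. A minimal sketch, assuming odd kernel sizes and "same"-style padding per branch (whether lucid pads exactly this way is an assumption), checks this with the standard convolution output-size formula. The helper names `branch_padding` and `conv_output_size` are hypothetical.

```python
def branch_padding(kernel_size, dilation=1):
    """Symmetric padding that preserves H x W for a stride-1 conv with an odd kernel."""
    if kernel_size % 2 == 0:
        raise ValueError("this sketch assumes odd kernel sizes")
    return dilation * (kernel_size - 1) // 2


def conv_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Standard convolution output-size formula along one spatial axis."""
    effective = dilation * (kernel_size - 1) + 1
    return (size + 2 * padding - effective) // stride + 1


kernel_sizes = [3, 5]  # the documented default
for stride in (1, 2):
    sizes = [
        conv_output_size(56, k, stride=stride, padding=branch_padding(k))
        for k in kernel_sizes
    ]
    # All branches must agree so their outputs can be summed element-wise.
    print(f"stride {stride}: {sizes}")
```

With padding `(k - 1) // 2`, the 3×3 and 5×5 branches agree both at stride 1 and at stride 2, which is what makes the element-wise fusion well defined.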

Attributes

kernel_sizes (list[int]): Stores the kernel sizes used in the SK blocks.

base_width (int): Stores the base width of feature maps.

cardinality (int): Stores the number of groups for grouped convolutions.

layers (list[nn.Module]): A list of stages, each containing a sequence of SK blocks.

Forward Calculation

The forward pass of SKNet consists of four steps:

- Stem: initial convolutional layers that extract low-level features from the input image.
- Selective Kernel stages: each stage applies a sequence of SK blocks; the number of blocks per stage is given by layers.
- Global pooling: global average pooling collapses the spatial dimensions into a single feature vector.
- Classifier: a fully connected layer maps the pooled features to num_classes class scores.
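The four steps above can be traced as tensor shapes for a 224×224 input. This sketch assumes a standard ResNet layout, which this page does not spell out for lucid. The stem is a 7×7 stride-2 convolution followed by a 3×3 stride-2 max-pool, the stage strides are 1, 2, 2, 2, and bottleneck blocks use an expansion factor of 4. The function names are hypothetical.

```python
def conv_out(size, kernel, stride, padding):
    """Output size along one spatial axis for a conv or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(image_size=224, num_classes=1000, expansion=4):
    """List of (step, channels, spatial size) under standard ResNet assumptions."""
    trace = []
    size = conv_out(image_size, kernel=7, stride=2, padding=3)  # stem convolution
    trace.append(("stem conv", 64, size))
    size = conv_out(size, kernel=3, stride=2, padding=1)        # stem max-pool
    trace.append(("stem pool", 64, size))
    stage_planes = (64, 128, 256, 512)
    stage_strides = (1, 2, 2, 2)
    for i, (planes, stride) in enumerate(zip(stage_planes, stage_strides), start=1):
        # Only the first block of each stage changes the spatial size.
        size = conv_out(size, kernel=3, stride=stride, padding=1)
        trace.append((f"stage {i}", planes * expansion, size))
    trace.append(("global pool", stage_planes[-1] * expansion, 1))
    trace.append(("classifier", num_classes, 1))
    return trace


for step, channels, size in trace_shapes():
    print(f"{step:12s} channels={channels:5d} spatial={size}x{size}")
```

Under these assumptions the last SK stage produces a 7×7 map, which global pooling reduces to a single feature vector before the classifier.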

Note

SKNet is well suited to tasks that require multi-scale feature representations.

Adding larger entries to kernel_sizes (for example, [3, 5, 7]) adds branches that capture features over larger receptive fields, at the cost of more parameters and computation per SK block.
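To see the cost side of that trade-off, the sketch below counts the weights of one grouped k×k branch (bias omitted) and its spatial extent. It compares a plain k×k kernel with a 3×3 kernel dilated to cover the same extent. The original SKNet paper uses a dilated 3×3 convolution in place of the 5×5 branch. Whether lucid builds its larger branches this way is an assumption. The channel and group counts and the helper name `branch_cost` are illustrative.

```python
def branch_cost(channels, kernel_size, groups=1, dilation=1):
    """Weights in one k x k grouped conv (bias omitted) and its spatial extent."""
    weights = kernel_size * kernel_size * channels * (channels // groups)
    extent = dilation * (kernel_size - 1) + 1
    return weights, extent


channels, groups = 128, 32  # illustrative values
for k in (3, 5, 7):
    plain = branch_cost(channels, k, groups)
    dilated = branch_cost(channels, 3, groups, dilation=(k - 1) // 2)
    print(f"{k}x{k} branch: plain {plain}, dilated 3x3 {dilated}")
```

A plain kernel's weight count grows with k², so a 7×7 branch holds about 49/9 ≈ 5.4 times the weights of a 3×3 branch. A dilated 3×3 reaches the same extent at the 3×3 cost.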